Editorial

You’re Being Influenced by Bots More Than You Think

These days, opening a social media app feels less like joining a conversation and more like stepping into a room full of masked figures. Some masks hide real humans. Others hide much worse.

A 2025 large-scale study by social-cybersecurity researchers estimated that roughly 20% of social media chatter during major global events came from bots, with the remaining 80% from humans.

Many bots are designed by foreign actors to manipulate public opinion on highly contentious topics. One study found that nearly 15% of tweets about Covid-19 posted in 2020 likely came from bots. These bots commonly posted misinformation with hashtags related to Trump, QAnon, vaccines, and China. Domestic agitators have also used bots to amplify Islamophobia and antisemitism.

The goal of these bots is simple but incredibly destructive: they’re designed to warp the public conversation until we can’t tell what’s real. By flooding social media with misleading stories, recycled outrage, and old videos reframed as “breaking news,” bots make everyday Americans distrust institutions—police, elections, local governments, even each other. They amplify the most divisive narratives, push emotionally charged disinformation, and create the illusion that the country is angrier and more polarized than it actually is.

When people can’t agree on basic facts, democratic decision-making grinds to a halt, and that’s exactly the outcome these campaigns are built to produce.

How Foreign Adversaries Are Using Bots Against Us

The foreign nations that deploy these bots do so to destabilize Western democracies and create openings for authoritarian governments to gain influence globally.

Russia has launched aggressive bot and troll operations to fracture support for NATO and Ukraine, and to make Americans fight with each other instead of noticing Russia’s actions abroad.

China deploys armies of bots to suppress stories that make Beijing look bad, like the ethnic roundup of Uyghurs or protests in major cities against the 2022 lockdowns. When people on X/Twitter typed in “Uyghur” or city names in search of footage, they instead found sexual content with “Uyghur” or “Beijing” in the caption. This strategy of burying sources is called “flooding.”

Iranian actors create thousands of fake social media profiles that appear to be ordinary Americans, sometimes with AI-generated photos, to push viewpoints aligned with Iranian interests.

The governments running these bot campaigns all have one thing in common: tightly controlled, state-run media. Nothing gets published without approval from the top. In the U.S., we do the opposite: we protect free speech and rely on an independent press. It’s one of our greatest strengths as a democracy. But it also creates an opening our adversaries can exploit. They can flood our information ecosystem with propaganda, disinformation, and fake accounts, while we can’t respond in kind because they tightly restrict what their citizens are allowed to see. It’s an asymmetry they understand—and use—very well.

A Bot Built Just for You

Bot operations study Americans the way marketers study customers. They map out race, region, religion, age, and political lean, then craft fake accounts and messages to hit emotional pressure points.

In these cases, the “bots” aren’t exactly bots. They are what experts call “sock puppets” — real people pretending to be someone they are not. But they do work in tandem with automated bots. After this small number of provocateur “sock puppets” post the initial content, the automated bots dramatically scale it up through mass retweets and replies, creating a megaphone effect.

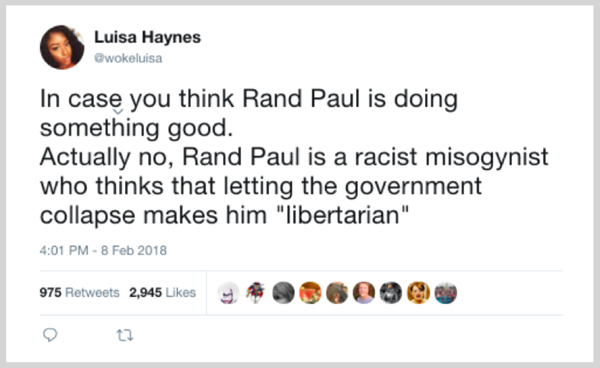

Sock puppets linked to Russia target Black voters by posting memes that portray the U.S. as irredeemably racist, old videos of police brutality captioned to imply they had just happened, and messaging that says “your vote doesn’t matter” because both sides are the same. One such account called @WokeLuisa amassed 50,000 followers before X/Twitter traced it back to the Internet Research Agency (IRA) based in St. Petersburg, Russia.

Meanwhile, foreign political operators seeking influence—and domestic grifters chasing ad revenue—are creating MAGA sock puppets. They’ll find photos of a European model, photoshop a MAGA hat on her head, and post her with captions that say things like “I Follow Back All Patriots.” CNN and the Centre for Information Resilience (CIR) exposed dozens of these accounts by reverse image-searching the photos.

Why Aren’t Social Media Companies Stopping Bots?

Social media platforms know bots are destructive. They’ve known this. And yet the problem keeps growing like roots under a sidewalk, quietly breaking everything around it.

Companies do try to dam up the flood. Facebook deleted 3.3 billion fake accounts in 2023 alone. TikTok says it has deleted over 120 million bot accounts in the past 12 months.

Some speculate that social media platforms could crack down on bots more aggressively than they do, but the economics of engagement work against that. Bots boost activity, drive outrage, and keep users hooked. That means the very thing that makes bots harmful to democracy also makes them profitable for Big Tech.

How to Spot a Bot

So what can you do? You learn the tells. Bots tend to reveal themselves the same way bad magicians do: recycled punchlines, suspicious timing, and the inability to improvise when the audience throws them a curveball.

Start with the language. Bots overuse certain emotional phrases (“Wake up America!!!”) and avoid any real personal detail.

Look at the activity pattern. Real humans sleep, eat, run errands, sometimes remember to water their plants. Bots… don’t. If an account posts at all hours, across wildly different topics, with almost no variance in tone, you’re probably dealing with a digital ghost.
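For readers who like to tinker, the "humans sleep, bots don't" test can be sketched in a few lines of Python. This is only an illustration, not how any platform actually detects bots. The function names and the four-hour threshold are assumptions made up for this example, and it assumes you already have a list of an account's post times in a single time zone.

```python
from datetime import datetime


def longest_quiet_stretch(post_times):
    """Longest run of consecutive clock hours (wrapping past midnight) with no posts."""
    active_hours = {t.hour for t in post_times}
    if not active_hours:
        return 24
    longest = run = 0
    # Walk the 24-hour clock twice so a quiet stretch that spans midnight
    # is counted as one run instead of two.
    for h in range(48):
        if h % 24 in active_hours:
            run = 0
        else:
            run += 1
            longest = max(longest, run)
    return min(longest, 24)


def looks_always_on(post_times, min_quiet_hours=4):
    """Hypothetical rule of thumb: no real break anywhere in the day is a warning sign."""
    return longest_quiet_stretch(post_times) < min_quiet_hours


# An account that posts once an hour from 8 a.m. through 10 p.m.
daytime_account = [datetime(2024, 1, 1, h) for h in range(8, 23)]
# An account that posts every single hour of the day.
round_the_clock = [datetime(2024, 1, 1, h) for h in range(24)]

print(looks_always_on(daytime_account))
print(looks_always_on(round_the_clock))
```

A long quiet stretch does not prove an account is human, and a shift worker or a shared newsroom account can look "always on." Treat the result as one clue among several.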

Check the followers, too. A real person almost never has just 8 followers whose accounts were all created in the same week and who all post the exact same memes with the exact same typos. Bots run with bot crowds.
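The "same week" tell can be roughed out in code as well. The sketch below is a hypothetical helper, not a real platform tool: it assumes you have somehow collected the creation dates of an account's followers, and it reports the largest share of them that were created within any single seven-day window.

```python
from datetime import date, timedelta


def share_created_in_same_week(creation_dates):
    """Largest fraction of follower accounts created within any one 7-day window."""
    if not creation_dates:
        return 0.0
    ordered = sorted(creation_dates)
    best = 0
    start = 0
    # Slide a window over the sorted dates, keeping only accounts
    # created no more than 6 days apart (7 calendar days inclusive).
    for end in range(len(ordered)):
        while ordered[end] - ordered[start] > timedelta(days=6):
            start += 1
        best = max(best, end - start + 1)
    return best / len(ordered)


# Eight followers, all created in the first five days of the same month.
suspicious = [date(2024, 1, d) for d in (1, 1, 2, 2, 3, 4, 5, 5)]
# Four followers whose accounts were created years apart, plus two close together.
ordinary = [date(2015, 3, 1), date(2018, 7, 9), date(2020, 1, 1), date(2020, 1, 3)]

print(share_created_in_same_week(suspicious))
print(share_created_in_same_week(ordinary))
```

A share near 1.0 on a small follower list fits the pattern described above, though what counts as "too high" is a judgment call that this sketch does not settle.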

Observe how the account reacts when you shift the tone. When you ask a real person a clarifying question (“Hey, what do you mean by that?”), they’ll respond with something distinct. A bot will either ignore you or repeat its talking point like a malfunctioning smoke alarm. They’re built to provoke, not converse. (Note: this will likely only work with automated bots, not sock puppet accounts.)

The truth is, we’re living through a strange new era in democracy, one where foreign influence operations don’t need to hack our voting machines. They just need to hack our attention. And our attention is increasingly captured by impersonators whose job is to sow distrust of neighbors we’ve never even met.

The responsibility falls on all of us to stay clear-eyed in a landscape flooded with fakes. The bots may be getting smarter, but so are we. And the more we recognize what’s real, the harder it becomes for bad actors—foreign or otherwise—to hijack our conversations and our democracy.

—Alex Buscemi (abuscemi@buildersmovement.org)

Art by Matthew Lewis
